In today’s podcast episode, we have invited Phaedra Boinodiris, Trust in AI Business Transformation Leader at IBM. We talked about Phaedra’s fascinating journey from gaming to AI, what keeps her up at night, and her plan for increasing the inclusion of women in the male-dominated tech industry.

Mia Dand: I’m excited to have you with us today. Your background is exceptionally impressive. You have focused on inclusion and technology since 1999. You have founded or acted as an advisor for multiple digital inclusion programs. You have co-founded womengamers.com, which offered the first national scholarship program for women to pursue degrees in game design and development, all really impressive things. How did you get to IBM from gaming?

Phaedra Boinodiris: It’s a long story but in essence, as you mentioned, my sister and I co-founded this web portal back in the 90s, which was focused on women who play computer and console games. We wrote game reviews and articles about the industry and eventually started a scholarship. Then there was a big shift in the games industry where suddenly game publishers were talking about going after the mass market instead of the hardcore gamers. There were a lot of conversations about who the casual gamer was, so I decided to go back to business school to pursue an MBA and learn more about what it would take to go after venture capital funding and launch our game studio. While I was there, I ended up launching several different case competitions–this is when a company will go to a business school with a real-life challenge, and the students have overnight to come up with an idea and then pitch it the next morning.

So I was on my sixth one and IBM was sponsoring a challenge around Business Process Management. They were looking for innovative ways of explaining Business Process Management to non-technical executives and I had no idea what it was at the time. They handed each team a stack of papers about three inches thick and they said, “Read these case studies.” While I was reading them I was thinking, “Wow. This is a strategy game, right?” You could tweak different business rules and see how that affects your broader ecosystem. You could have competing models. You could have a multiplayer version for a model where people could collaborate.

I ended up pitching this idea and unbeknownst to me, one of the VPs for Strategy at the time was a judge. She pulled me aside and said, “Hey, I want to fund this idea right now. Can you make it for me in three months?”

It was an interesting segue into the world of IBM. I ended up interning at IBM while I was getting my MBA full time and then I was asked to lead our first serious games program, which is when you use video games to do something other than entertain. And it was through that work and my volunteer work as well (I’ve always been very invested in volunteering in the K-12 space) that I’ve continued to work for IBM.

What interested me was the intersection of Artificial Intelligence (AI) and play. I then began to do more and more work with AI in games and it was tremendously fun. That was my segue into my current role as IBM Consulting’s Trustworthy AI Leader.

Mia Dand: That is such an incredible story. From a business school case study to leading AI business transformation at a multinational company.

Phaedra Boinodiris: Yeah, it was interesting. One of the catalysts that spurred me to focus more on AI ethics was that, several years ago, I got more and more horrified by what I was reading in the news and thought, “That’s it. I want to learn everything I possibly can about this space.” I found an incredible Ph.D. program, and I’m currently about halfway through my dissertation. It has opened the aperture for me to network with like-minded people and to look at this space holistically. It’s something that I’m truly passionate about.

Mia Dand: That’s fantastic because it’s such a critical area that should be on everyone’s minds, especially those in the business and the tech world who are deploying a lot of these technologies and companies who are adopting these technologies to serve their customer base. In your current role, you are IBM’s Trust in AI Business Transformation Leader, which is a client-facing role. What is the main concern that you’re hearing from your clients and what is keeping you up at night?

Phaedra Boinodiris: Well, three things keep me up at night. First is climate change. That certainly keeps me up at night as it does many people. The second thing that keeps me up at night is that so many people have no idea that an AI model is being used to make decisions that could directly affect them, whether it’s the percentage of the interest rate they get on their loan or whether their kid gets into that college that they applied for, the list goes on and on.

The third thing is I’m worried that people may assume that because it’s this AI model that doesn’t have “fallible human bias,” the decisions made by the AI are morally or ethically squeaky clean. They assume that it is not a person so we don’t need to worry about it.

I also find there’s a lot of conversation with our customers about concerns such as litigation. They’re very worried about whether their model has bias and how they might be able to scale AI. But I would think even more importantly about how they get people to trust the results of their model. What I found by studying this space is that there’s a misconception of it being a technological challenge when in fact it’s more of a socio-technological challenge. And because it’s a socio-technological challenge, it must be addressed holistically.

Mia Dand: The issues that you mentioned are keeping a lot of us up at night. I’m glad there are smart people out there who are also looking at the space and are asking the right questions. I’m also glad to hear that there are organizations and folks like yourself who are paying attention to how we can plan for a more ethical future.

You mentioned “socio-technological challenge”. Since you work with different companies and clients, what cultural shift, in your opinion, is needed at large companies as they’re deploying these technologies? How can they be more thoughtful and make sure that what they’re deploying out there is ethical?

Phaedra Boinodiris: When addressing any kind of socio-technological challenge, you must approach it holistically, which means people, process, and tools. We can’t just imagine that by simply installing the XYZ tool you can suddenly be assured that everything is going to be squeaky clean. You have to consider the challenge holistically.

I mention this early in conversations with customers who are very concerned about, for example, the risk of being non-compliant. Oftentimes these are the same organizations that have a culture problem. They will be the first to tell you that they have trouble attracting women and minorities. They look at their teams of data scientists and they all look quite uniform. There isn’t diversity, there isn’t inclusivity.

When thinking about the culture of an organization and having conversations about diversity, equity, and inclusivity, it’s important to think not only about gender, race, and ethnicity but also about what people’s worldviews are and what kinds of skill sets they bring, because this is not a challenge for data scientists alone. When people reference practitioners, it has to be a much wider set of roles and skill sets that need to come together and work together to solve these kinds of challenges.

One of the things that we’ve done is to get uncommon stakeholders to work together and tackle some of the larger challenges around AI ethics, including adopting frameworks for systemic empathy.

How do you get people to think systematically about empathy?

Given a particular use case, how do you imagine based on not just who’s in the room, but who’s not in the room, who’s not being considered as an end audience?

What are potential primary, secondary and tertiary effects?

Which of these effects might be harmful?

Given the values of that particular organization, what are the rights of the end-users and how would you mitigate to protect those individuals?

Again, that kind of framework is something that we should be introducing, not just within a professional environment. Certainly not just for data scientists, but across roles, across different practitioners coming together to work on this. The more diverse the group, the better.

This is also something we need to be introducing to young people or those early in their careers, from higher education institutions to high school kids to middle school kids, because we’re not talking about coding. We’re just talking about getting people to consider what is apparent and not apparent and how to design AI to protect people from potential harm.

Mia Dand: I couldn’t agree more on every point that you made and there’s a lot to unpack. One is this inclusion of, as you said, uncommon voices and highlighting how critical it is to have diverse voices in this conversation. You mentioned systemic empathy, which I’m so intrigued by along with your point in making sure that we start early rather than later. We should be starting at the beginning as they’re getting trained or as they enter the workforce.

Let’s just talk about two points that you’ve raised here. Let’s start with the first one, which is about the culture of engineering at companies. Cultural change is challenging. You have a hard job. How do you ensure that the engineering team sticks to this culture change that you’ve identified? And also, are there any specific governance tools that you use for designing and deploying AI responsibly?

Phaedra Boinodiris: The hard part is changing people’s behaviors. It’s not as much about the tooling and the tech as it is about understanding people and getting people to recognize the value of other people. Some examples of what we’ve been doing, apart from messaging on the importance of diversity, equity, and inclusivity, include putting forth an effort to measure the level of diversity and inclusivity on these teams.

How many women are on your data science team?

How many minorities are on your teams of data scientists?

What does the makeup of your AI ethics board look like?

How many women? How many minorities? How many neurodiverse people?

What about their age? Et cetera.

Another thing that we’ve done in addition to that focus is craft something called the Scale Data Science Method, an extension of CRISP-DM that, in essence, offers AI governance across the AI model lifecycle.

So imagine all these roles I mentioned, right? The fact that you’ve got to have data scientists working with industrial-organizational psychologists, working with designers, working with communication specialists, and so on, all together on the AI model lifecycle to make sure that you’re curating it responsibly.

The Scale Data Science Method tool merges data science best practices with project management, design frameworks, and AI governance, with all of this content together across the board. It demonstrates where you are in the life cycle, where your team is, who you need to be talking to, what the requirements are, what documentation you need, what you need to plan for next, and who you need to do this with. It’s similar to a Weight Watchers coach, but instead of telling you to write things in your food diary or make sure you weigh yourself, it’s telling you where you are on the AI model lifecycle and what you need to do, with a dashboard so you can work together as a team. Think about constant reminders to work together with different kinds of people at different stages of the AI model lifecycle to make sure that indeed you’re curating it responsibly.

Mia Dand: I love this focus on the multi-disciplinary approach to AI model development and deployment. Couldn’t agree more. We hear a lot of talk in this space about how we need to include diversity but your approach sounds comprehensive. You’re walking the walk with tools, tracking, and dashboards. Folks can see for themselves how they measure their success and progress.

Speaking of progress, there is one dimension of diversity that is constantly talked about, and yet the progress has been very slow and that is gender diversity within the tech industry. You have been at the forefront of this issue, you’re a pioneer in this space. You won the United Nations’ Women — Woman of Influence in STEM and Inclusivity Award in 2019. I would like to hear your thoughts on what is the most effective way for us to increase the inclusion of women in this industry where there’s a lot of talk, but the progress has been dismal just based on the statistics and the inclusion of women in this space.

Phaedra Boinodiris: I have a lot to say about this because I have gone on a journey where I’ve had different ideas and I’ve tried different things, but the latest version of my approach is measuring what we want to see more of and then rendering it transparent.

As an example, just this past year alone, there have been some horrifying research studies about entrepreneurs. The number one factor for a venture capitalist group to fund an entrepreneur is not whether they successfully launched other businesses. It’s not what their idea was or is for their business. It is their gender. That is the number one determining factor of whether an entrepreneur gets funded, and thinking about this–and again about previous renditions of it–you can’t be what you can’t see.

The earlier framing was that there needs to be an onus on women getting out there more and doing this and doing that. Instead, I’m starting to pivot to saying, “Yes, and we need to measure, again, how many women, how many minorities, how many people from historically underrepresented groups are on these teams, are getting funded as entrepreneurs, are on the AI ethics board, et cetera,” such that when efforts like the ESG frameworks get rolled out, those kinds of measurements can be rendered transparent to potential shareholders.

If I’m looking to buy shares of stock in an interesting company then I want to make sure it’s doing good in the world. Whether it’s for society, whether it’s for the planet. If those measurements are rendered transparent then I, as a potential shareholder, would be able to find these groups quicker.

I’m hoping there is a pivot for these ESG frameworks to start to do this also with the use of artificial intelligence, measuring things like DEI within those groups of technologists curating this technology. Does that make sense?

Mia Dand: Absolutely. That’s such a smart approach because part of it is a cultural shift–changing people’s attitudes toward the inclusion of women and not treating them as lesser. I like what you said about having measurable data and using that for decision-making because right now, what we see is used to make decisions, and I think the way we measure success needs to change. That will only change if you start including DEI metrics in that data and make those actionable.

Phaedra Boinodiris: If we can render it transparent to the market, then we can let the market decide. By giving consumers information about who is doing this kind of measurement and who is curating AI responsibly, AI that has gone through governance and audits on fairness, explainability, robustness, transparency, and people’s data privacy (the Five Pillars), we’re more empowered to make better decisions as consumers on who we want to engage with.

Mia Dand: Absolutely! Let people put their money where their values are. Present it to them and say, this is the data, these are the companies doing well, and these are the ones who are walking the walk. Then let the market decide where they want to put their funds and money. I absolutely agree!

Lately, we’ve seen a lot of headlines about ethical transgressions in Big Tech, and there’s a level of cynicism accompanying a lot of the news that comes out of companies today. That’s for good reason, because we’re seeing so many revelations about what companies are doing behind the scenes. So there’s a certain amount of doom and gloom in the industry, and it seems like a very dystopian world that we’re living in right now. For folks who feel like they’re powerless and they’re not in these rooms where the decisions are being made, or who care about ethics and inclusion in AI, how would you suggest that they get involved and how can they make a difference in their roles?

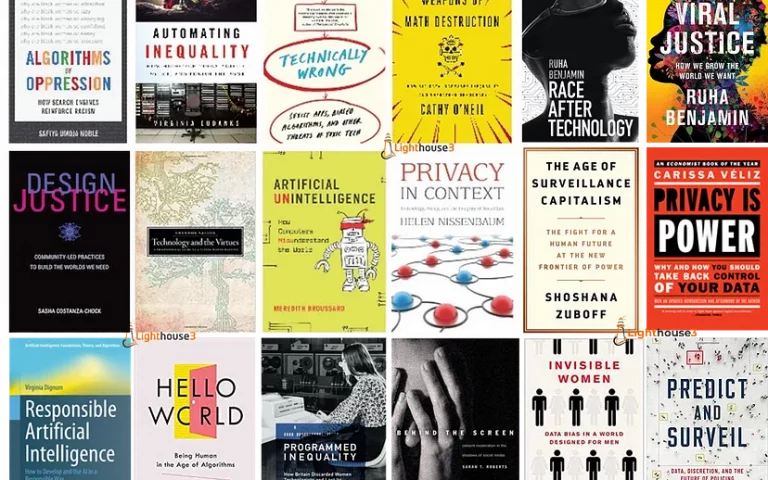

Phaedra Boinodiris: One thing that I advocate for people who don’t feel like they’re in positions of power is to double down on education. Educate others as much as possible, because I find it astonishing and heartbreaking that so few people can get this kind of learning today. Typically it’s either those professionals or higher-ed students who self-categorize as coders or data scientists or machine learning scientists who get to take classes in things like Data Ethics or AI and Ethics, and I find it crazy. Given how AI, again, is being used to make decisions that directly affect every one of us, why is this knowledge not being proliferated to a lot more different kinds of people? No matter what you want to be when you grow up, whether you want to be a fashion designer or the head of agriculture for your region, artificial intelligence is making a tremendous impact in those spaces. It’s important to understand the fundamentals such as the fairness of algorithms, explainability, robustness, et cetera.

You can advocate for getting this kind of knowledge into more and more hands and, as I mentioned earlier, into younger people’s hands. Champion this kind of education being included in high schools and middle schools. Go back and give talks there, or invite others to give talks at your alma mater or the high school or middle school that you graduated from, or that your kids go to. I’m on the advisory board for a local children’s science museum here in my area and I was invited to demo at a kids’ coding event. I knew I wanted to introduce younger kids to the idea of explainable and transparent AI.

What could be a fun way of introducing this to kids? I worked with a local high school and we co-created an AI-powered Harry Potter Sorting Hat. In the world of Harry Potter, you put the Sorting Hat on your head and it uses magic to determine which Hogwarts House you belong in, and the lips of the hat move and it belts out, “Ravenclaw” or “Hufflepuff.” We made this hat where, in essence, you put the hat on your head and, of course, instead of magic, we had a microphone embedded in the hat. It was hooked up to a laptop that had a processor and the ability to curate and categorize what people said into different Hogwarts Houses. They would say a sentence and then it would use NLP to determine which Hogwarts House they belonged in and announce it using the voice from the movie.

I rigged the hat such that if any of my kids were to put it on their heads, it would put them into Slytherin. I knew that they would despise it. My daughter was 15 at that time, so she gets up on stage with me and she puts the hat on her head and she says something that I guessed she would say, and immediately the hat says, “Slytherin”. She turns to me, crosses her arms, glares at me and says, “Mom, you rigged the hat.” And I said, “Let this be a lesson to you: never trust an AI that’s not fully transparent and explainable. You should be able to ask the hat what data was used to put you into that Hogwarts house.”
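Editor’s note: to make the lesson concrete, here is a minimal sketch of what “asking the hat what data was used” could look like. It is not the hat built for the event; the keyword lists, the `sort_with_explanation` function, and the `override` parameter are all hypothetical, and simple keyword matching stands in for whatever NLP the real hat used. The point is only that an explainable sorter returns its evidence along with its answer, so a rigged result is visible.

```python
# Hypothetical keyword lists, invented purely for illustration.
HOUSE_KEYWORDS = {
    "Gryffindor": {"brave", "courage", "daring", "bold"},
    "Hufflepuff": {"loyal", "kind", "patient", "fair"},
    "Ravenclaw": {"wise", "clever", "curious", "learn"},
    "Slytherin": {"ambitious", "cunning", "power", "win"},
}


def sort_with_explanation(sentence, override=None):
    """Pick a house and report which words in the sentence supported each house.

    `override` is a hypothetical "rigging" switch: if set, the hat announces
    that house regardless of the evidence, but says so in its explanation.
    """
    # Normalize: lowercase each word and strip surrounding punctuation.
    words = {w.strip(".,!?\"'").lower() for w in sentence.split()}

    # Evidence: the matched keywords per house, sorted for stable output.
    evidence = {
        house: sorted(words & keywords)
        for house, keywords in HOUSE_KEYWORDS.items()
    }

    if override is not None:
        return {"house": override, "evidence": evidence, "overridden": True}

    # Choose the house with the most matched keywords.
    # Ties go to the house listed first in HOUSE_KEYWORDS.
    best = max(evidence, key=lambda house: len(evidence[house]))
    house = best if evidence[best] else None  # None when nothing matched
    return {"house": house, "evidence": evidence, "overridden": False}


honest = sort_with_explanation("I am brave and bold, and I love to learn.")
rigged = sort_with_explanation("I am kind and loyal.", override="Slytherin")
print(honest["house"], honest["evidence"])
print(rigged["house"], rigged["overridden"], rigged["evidence"])
```

In the rigged call, the announced house is Slytherin, yet the explanation shows that the only matching words pointed to Hufflepuff and that an override was applied, which is exactly the kind of question the daughter in the story should have been able to ask.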

It’s a funny thing about the use of pop culture as a means to teach some of these more salient ideas around things like trust, transparency, and explainability for AI. I think borrowing something from the world of Harry Potter certainly resonated with her and with the younger audience members there at the time. But anyway, that’s my food for thought.

Mia Dand: I love it! How creative, how smart! This is something that’s going to stay with these children for the rest of their lives.

Many present AI as technical jargon. AI does impact all of us, and yet the people making decisions, the people who are in the know, are just a handful of technical folks, when this knowledge should be proliferated as part of education. I love your approach of starting them young and distributing information in whatever form they’re able to consume it, so that it sticks with them. Love every part of this and that story is going to stick with me for a long time.

Phaedra Boinodiris: I just started penning a kids’ book on this subject of AI ethics. So I have no idea what I’m doing–full transparency–but I think it’s important. It’s been super fun. Just thinking about metaphors and how to introduce these concepts in a really fun way. So wish me luck!

Mia Dand: I cannot wait to read it. This is so smart. Good luck, not that you need any luck. Your approach to AI ethics and the way of disseminating it, making it stick with individuals and younger people, as well as within a large corporation has been so inspiring, Phaedra. I have to say, I’ve enjoyed this conversation with you so much.

Phaedra Boinodiris: I appreciate that, Mia! I’ll take any chance I can get to advocate for more knowledge in this space, especially, again, with respect to the more holistic approach and thinking about who gets to work on this, who gets to think about this. Just thinking about it with respect to socio-technological challenges, I think, has made a difference to me and how I think about this space. Thank you for allowing me to share.

Mia Dand: It’s been an absolute pleasure. I do feel that the future of AI needs to be more diverse and more inclusive. As you said, it needs to be accessible to a broader range of folks than it is today. Thank you so much again for joining us.

Join us again next week for our conversation with Mukta Singh, Product Management Executive, Data and AI at IBM, in which we will explore the different dimensions of data privacy across the entire data and AI life cycle.