Today, we learn about Ravit Dotan’s start in the AI space and the roles she has held throughout her journey. She also discusses some of the issues she faces on a daily basis and shares how we can build a healthy culture that is inclusive of people from all backgrounds, as well as where the challenges lie in doing so.

This interview is part of Women in AI Ethics (WAIE)’s “I am the future of AI” campaign launched with support from the Ford Foundation and Omidyar Network to showcase multidisciplinary talent in this space by featuring career journeys and work of women as well as non-binary folks from diverse backgrounds building the future of AI. By raising awareness about the different pathways into AI and making it more accessible, this campaign aspires to inspire participation from historically underrepresented groups for a more equitable and ethical tech future.

Can you share an incident that inspired you to join this space?

I fully joined the AI ethics space in 2020. I did research in AI ethics before that year (during my PhD), but the events of 2020 inspired a pivot in my work. I was especially influenced by that year’s social and environmental upheavals, including the pandemic, the BLM protests, the massive fires in Australia, and a war in my home country, Israel. It was all just too much. I felt cooped up in an ivory tower, with little impact on what was going on outside. My mindset shifted. My top professional priority became using my skills for social good, and I realized that the best way for me to do that was to push AI ethics forward in the industry.

How did you land your current role?

I landed all my roles in AI ethics through networking. Once I realized I was interested in integrating with the industry, I started reaching out to people whose roles intrigued me. I had many, many of these conversations (I stopped counting after about 200). They helped me identify needs, and I started working on projects to address them. I shared my insights publicly on LinkedIn, and my networking gradually revolved around my projects. I was able to speak to pressing needs, had a conviction about how to address them, and had a history of work I could show to illustrate my approach. As a result, I met more like-minded people who saw how I could contribute to their organization.

What kind of issues in AI do you tackle in your day-to-day work?

The issue I come across the most is fairness. Fairness includes mitigating unintended biases by ensuring the AI performs equally well for everyone, especially people in marginalized social groups based on gender, ethnicity, disability status, and so on. Some think that fairness issues only arise from bias in data collection. That is a misconception. Other design choices matter too. For example, allowing AI to generate images from prompts that contain racial slurs is offensive and can reinforce social stereotypes.

If you have a non-traditional or non-technical background, what barriers did you encounter and how did you overcome them?

I feel that my non-technical background is a strength. If anything about it held me back, it was only insecurities caused by my misconception that technical backgrounds are superior. The trick is understanding the field’s needs and how your skills can serve these needs. For example, one pressing need is to understand AI’s social, political, and environmental consequences. A background in social science, history, or philosophy can be very helpful for that purpose. Another pressing need is awareness raising. A background in communication, art, or translation can be very helpful. In my case, I identified the needs that I could help with by networking. Talking with people helped me see the gaps that I could help fill.

Why is more diversity — gender, race, orientation, socio-economic background, other — in the AI ethics space important?

Much of AI ethics is about noticing social dynamics. Our different backgrounds make different aspects of social dynamics more salient to us. Diversity in social positions is therefore crucial for AI ethics. Without it, our collective work would fail to recognize how AI impacts our world, which ways of addressing AI harms are effective and which are not, and how AI can be used for social good.

What is your advice to those from non-traditional backgrounds who want to do meaningful work in this space on how to overcome barriers like tech bro culture, lack of ethical funding/opportunities, etc.?

Find a project that you’re passionate about and start working on it, even if you only have a little time to invest in it. Gradually hone your skills as you work on this project. Learn what you need to learn, even if it’s outside your comfort zone. But make sure you’re not learning as a form of procrastination or just out of insecurity. Talk to people about your project until you find people you enjoy talking about it with. Build good relationships with them. They can support you — and you can support them. Funding and job opportunities that are a good fit for you may come your way, too.

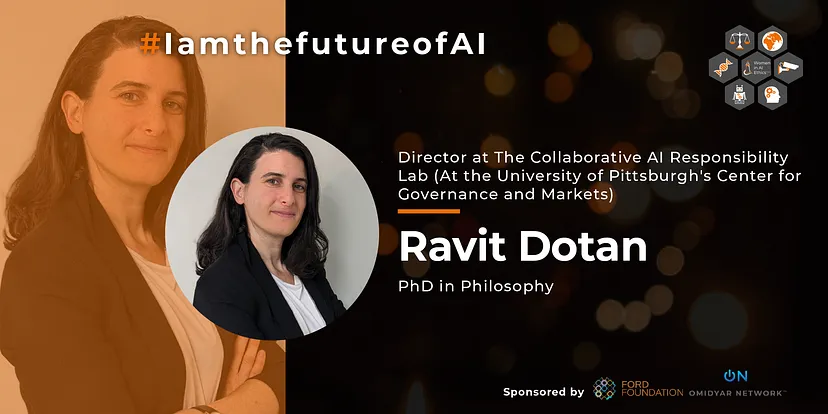

Ravit Dotan is a tech ethicist specializing in data technologies such as AI.

She focuses on supporting tech companies, investors, and procurement teams in developing responsible AI approaches.

Her recognition includes being named one of the 100 Brilliant Women in AI Ethics for 2023 by Women in AI Ethics and receiving a 2022 “Distinguished Paper” Award from FAccT, the top AI ethics conference.

Ravit’s work has also been featured in publications such as the New York Times, TechCrunch, and VentureBeat.

She earned her Ph.D. in Philosophy at UC Berkeley.

Connect with her on LinkedIn.